The contemporary landscape of scientific discovery and technological innovation places a premium on the swift and accurate retrieval of pertinent research and patent information. Researchers and R&D professionals continually seek instruments capable of optimizing their workflows and delivering dependable insights. While large language models such as GPT have garnered considerable attention for their conversational prowess, the critical endeavor of research often necessitates a more focused and precise methodology. Datascope emerges as a research tool meticulously crafted to furnish precise and validated information, thereby distinguishing itself from general-purpose chatbots.

Understanding the Fundamentals: GPT as a General-Purpose Language Model

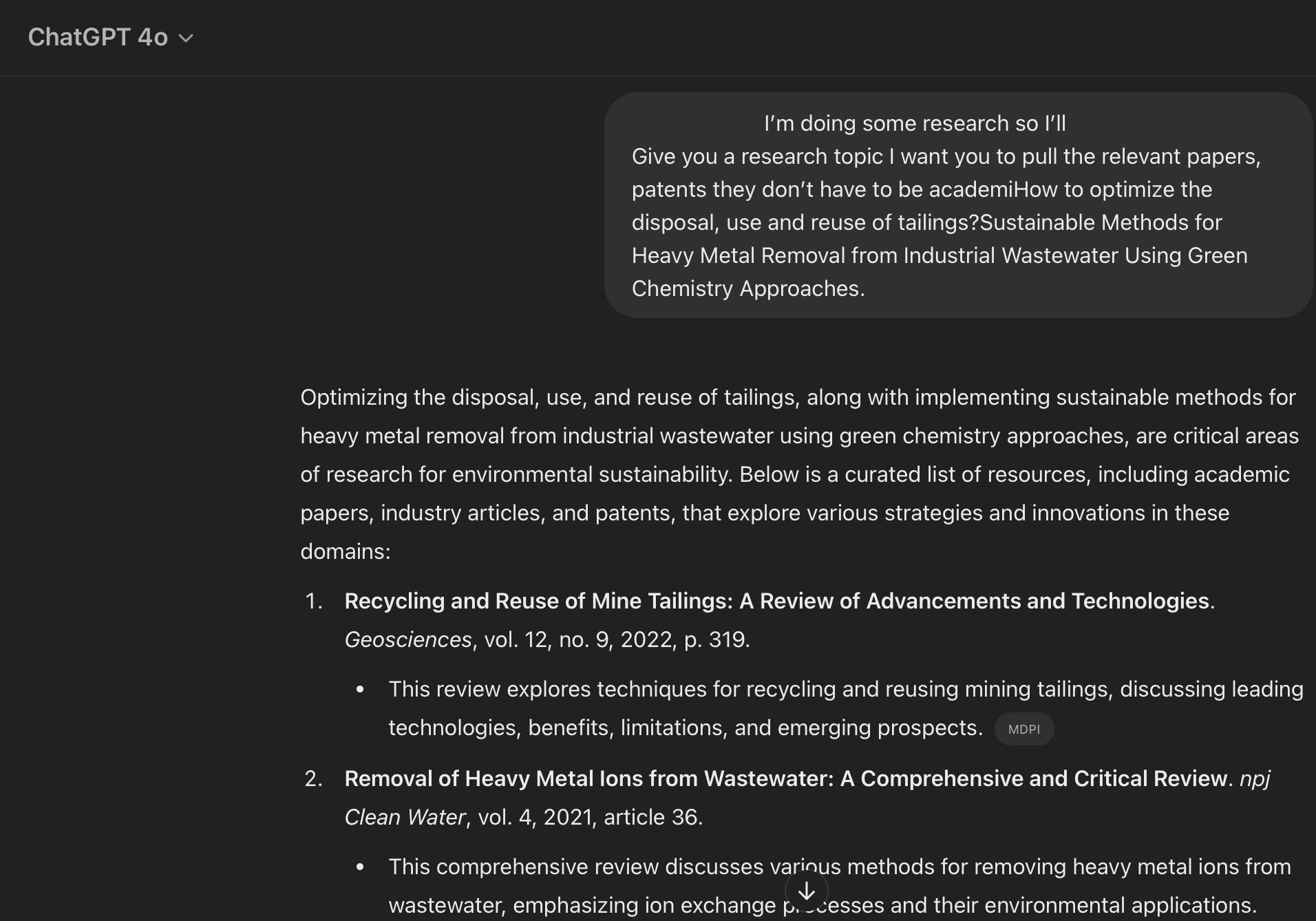

GPT powers general-purpose chatbots that draw upon an extensive but at times unverified body of training data to generate text and engage in dialogue. Its architecture is designed to identify patterns within this vast corpus of text and code, enabling it to produce human-like text, translate between languages, and respond to a wide array of queries. This broad training equips GPT with a remarkable ability to synthesize and present information derived from its training data. However, a fundamental limitation arises in the context of rigorous research: its reliance on a static training dataset, without direct, real-time access to authoritative sources, can compromise the currency and veracity of the information it provides. The strength of GPT lies in its capacity to connect disparate pieces of information within its training data and articulate them coherently. Yet this very strength becomes a weakness when up-to-date, meticulously verified information is paramount, as it is in scientific and patent research. While GPT can serve as a valuable asset for initial topic exploration or brainstorming, its lack of a direct link to curated databases makes it less suitable for tasks demanding high accuracy and reliability, such as comprehensive patent searches or in-depth scientific literature reviews. Its tendency to generate plausible-sounding but factually incorrect information, often referred to as "hallucinations," further underscores its limitations as a primary research tool in domains where precision is non-negotiable.

Datascope: A Purpose-Built Research Tool

In contrast to the broad capabilities of GPT, Datascope is not a general-purpose language model but a specialized platform engineered to address the unique demands of researchers. Its core design principle is to provide direct access to verified and validated information sourced from reputable databases. This fundamental difference in architecture reflects a conscious decision to prioritize accuracy and reliability over the conversational breadth that characterizes models like GPT. Datascope's development reflects a growing recognition within the research community of the inherent limitations of general-purpose AI tools when applied to the nuanced requirements of scientific and patent research. The increasing complexity and volume of information in these domains call for tools that can not only process vast amounts of data but also ensure the credibility and relevance of the information retrieved. Datascope's very existence speaks to the evolving landscape of research tools, in which specialized platforms are emerging to complement and, for many specific research needs, surpass the capabilities of broader AI models.

The Power of Verified Sources: Datascope's Data Advantage

A key differentiator between Datascope and GPT lies in their respective approaches to data acquisition and use. Datascope connects directly to authoritative databases, including Google Scholar, the European Patent Office (EPO), and Cognition IP. This integration gives researchers a significant advantage: access to a wealth of peer-reviewed academic literature, official patent documentation, and specialized intellectual property insights. Accessing Google Scholar through Datascope lets researchers tap into a vast index of scholarly literature, encompassing research papers, theses, and abstracts. Similarly, direct access to the EPO provides crucial resources for those involved in innovation and intellectual property, offering full legal texts, timelines, and classifications of patents. The inclusion of Cognition IP, a specialized IP database, likely furnishes additional information on patent landscapes, legal standing, and competitive intelligence. This deliberate reliance on specific, reputable databases ensures a markedly higher degree of accuracy and reliability in the information Datascope retrieves than GPT offers, since GPT draws on a far broader and less rigorously vetted range of sources. The choice of these particular databases underscores Datascope's focus on supporting a broad spectrum of research activities, from fundamental scientific discovery to technological innovation and its protection.

Beyond Keyword Matching: The Revolutionary Similarity Index

A standout feature of Datascope is its innovative Similarity Index, a powerful tool designed to provide precise relevance scoring for research documents. Unlike traditional keyword-based search methods, and unlike the open-ended text generation of large language models, the Similarity Index analyzes the content and context of patents and research papers in relation to the user's specific research question. It employs sophisticated algorithms to assess how relevant each document is, presenting a percentage score that serves as a clear indicator of the document's pertinence. This capability directly addresses a significant challenge researchers face: the time-consuming process of manually sifting through numerous search results to identify truly relevant information. By providing a nuanced and accurate evaluation of document relevance, the Similarity Index offers a substantial improvement over simple keyword-based searches. The implementation of such a feature suggests a deep understanding of the research workflow and of the specific hurdles researchers encounter when extracting meaningful information from vast repositories of data.
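The article does not disclose how the Similarity Index computes its scores. To illustrate the general idea of scoring a document against a research question and reporting the result as a percentage, the minimal sketch below uses plain bag-of-words cosine similarity. This is a stand-in technique chosen for simplicity, not Datascope's method; the function names `tokenize` and `similarity_percent` are hypothetical, and a real system could instead use weighted terms or semantic embeddings.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def similarity_percent(query: str, document: str) -> float:
    """Cosine similarity of term-frequency vectors, scaled to 0-100.

    Hypothetical stand-in for illustration only; NOT Datascope's algorithm.
    """
    q = Counter(tokenize(query))
    d = Counter(tokenize(document))
    # Dot product over the terms the query and document share.
    dot = sum(q[t] * d[t] for t in q.keys() & d.keys())
    norm = math.sqrt(sum(v * v for v in q.values())) * math.sqrt(
        sum(v * v for v in d.values())
    )
    if norm == 0:
        return 0.0  # an empty query or document has no measurable overlap
    return round(100 * dot / norm, 1)


if __name__ == "__main__":
    question = "solid state lithium battery electrolyte"
    print(similarity_percent(question, "A solid state electrolyte for lithium battery cells"))  # 79.1
    print(similarity_percent(question, "Battery holder for a flashlight"))  # 20.0
```

The flashlight document shares only the word "battery" with the question, so it scores far below the electrolyte document. Bag-of-words scoring ignores word order and synonyms, which is one reason a context-aware index would aim to do better.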

Time Savings and Enhanced Efficiency: A Practical Perspective

The practical implications of Datascope's Similarity Index are significant, particularly in terms of the time saved by researchers. Consider a researcher investigating a specific type of battery technology. Instead of laboriously reviewing hundreds of patents and publications that merely mention batteries, the Similarity Index can quickly identify and prioritize those documents that directly address the specific technology in question. This ability to filter out tangential or less relevant material allows researchers to concentrate their efforts on the most promising sources of information, accelerating their progress and enhancing their understanding. Similarly, for an IP analyst conducting a prior art search for a new invention, the Similarity Index can highlight patents with a high relevance score, dramatically reducing the time spent reviewing potentially irrelevant documents. This efficiency gain extends beyond mere time savings; it allows researchers to dedicate more of their valuable time to higher-level cognitive tasks such as analysis, interpretation, and the generation of new ideas. The ability to rapidly access and prioritize relevant information can have a profound impact on the overall pace of research and development, potentially accelerating the rate at which scientific discoveries are made and technological innovations are brought to fruition.
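The prioritization step in the battery and prior-art examples amounts to ranking documents by score and setting aside those below a cut-off. The minimal sketch below assumes each document already carries a percentage relevance score; the `ScoredDocument` type, the `prioritize` function, the 60% default threshold, and the document IDs and scores are all hypothetical and chosen only for illustration.

```python
from typing import NamedTuple


class ScoredDocument(NamedTuple):
    doc_id: str   # hypothetical identifier, e.g. a patent publication number
    score: float  # relevance score in percent (0-100)


def prioritize(docs: list[ScoredDocument], threshold: float = 60.0) -> list[ScoredDocument]:
    """Keep documents scoring at or above the threshold, most relevant first."""
    kept = [d for d in docs if d.score >= threshold]
    return sorted(kept, key=lambda d: d.score, reverse=True)


if __name__ == "__main__":
    # Hypothetical scores for illustration only; not real Datascope output.
    results = [
        ScoredDocument("DOC-A", 42.5),
        ScoredDocument("DOC-B", 91.0),
        ScoredDocument("DOC-C", 67.3),
        ScoredDocument("DOC-D", 12.8),
    ]
    shortlist = prioritize(results)
    for doc in shortlist:
        print(doc.doc_id, doc.score)
    print(f"{len(results) - len(shortlist)} of {len(results)} documents set aside")
```

A fixed threshold is the simplest policy; choosing the cut-off is a judgment call, since a prior art search may warrant a lower threshold to avoid missing a relevant patent.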

Comparative Analysis: When Precision Matters Most

When comparing Datascope and GPT in the context of research, the distinctions become particularly evident in specific research scenarios. For instance, in a critical task like a prior art search for a groundbreaking invention, Datascope, with its direct access to the official patent databases of the EPO and its Similarity Index, is poised to deliver accurate and highly relevant patent documents. In contrast, while GPT might produce text that appears relevant based on its training data, it lacks both a direct connection to these authoritative sources and Datascope's relevance assessment capabilities. GPT could therefore miss crucial patents or include irrelevant information, potentially compromising the integrity of the search. Similarly, in a systematic literature review, Datascope's connection to Google Scholar, coupled with the Similarity Index, would enable researchers to efficiently identify and prioritize pertinent academic papers. GPT, on the other hand, might generate summaries of papers but could introduce inaccuracies or overlook key publications that are not adequately represented in its training data. The potential for inaccuracies or "hallucinations" in GPT's output presents a considerable risk in research settings where the reliability of data is paramount. This is why specialized tools like Datascope offer a more trustworthy option for critical research tasks where precision is non-negotiable. While GPT may be useful for preliminary exploration or brainstorming thanks to its broad knowledge base, Datascope emerges as the preferred instrument for in-depth, critical research that demands validated information and a high degree of accuracy.

Embracing Precision for Research Advancement

In the critical domains of scientific and patent research, accuracy and efficiency are not merely desirable attributes but fundamental necessities. Datascope addresses the inherent limitations of general-purpose AI tools by offering a research environment grounded in verified data and intelligent analysis. The Similarity Index represents a significant advancement, helping researchers conserve valuable time and concentrate on the most relevant information. By connecting directly to trusted repositories such as Google Scholar, the European Patent Office, and Cognition IP, Datascope ensures that research is built upon a solid foundation of accuracy and reliability. The adoption of specialized research tools like Datascope marks a crucial step towards more rigorous and efficient research methodologies, ultimately contributing to the advancement of knowledge and innovation across scientific and technological fields. The future of research likely involves a strategic combination of general-purpose AI tools for broader tasks and specialized platforms like Datascope for in-depth, precision-focused investigations, letting researchers leverage the strengths of each to optimize their workflows and achieve more impactful discoveries.

Table 1: Feature Comparison: Datascope vs. GPT

| Feature | Datascope | GPT |
|---|---|---|
| Core Functionality | Specialized research platform | General-purpose language model |
| Primary Data Sources | Curated databases (Google Scholar, EPO, etc.) | Vast and diverse internet text and code |
| Data Verification | Direct access to authoritative sources | Relies on patterns in training data |
| Accuracy for Research | High | Variable, prone to "hallucinations" |
| Relevance Assessment | Sophisticated Similarity Index | No dedicated relevance scoring |
| Patent Information | Direct access to official patent databases | Limited to information in training data |
| Scientific Literature | Direct access to academic databases | Limited to information in training data |
| Time Efficiency | High (due to Similarity Index) | Moderate (requires manual filtering) |
| Focus | Precision and accuracy in research | Broad text generation and conversation |
| Suitability for Critical Research | Highly suitable | Limited suitability without verification |